Docker is an open-source platform for managing container lifecycles. Containers are portable, efficient components that operate in a virtualized environment. The Docker company partners with cloud service providers and vendors like Red Hat and Microsoft to develop containerization technologies.

Virtualization is an essential part of today's IT landscape. It's heavily utilized on-premises and is the foundation of the cloud. There are two main types of virtualization:

- Virtual machines: Virtualization at the hardware layer, with a defined virtual machine configuration, an installed operating system and supporting applications and services.

- Containers: Virtualization at the operating system layer, with a portable structure containing the application and any supporting libraries.

Docker is a great way to get started with containers. The Docker website has strong documentation, and the Docker engine (which supports the containers) is available for the common Linux, Windows and macOS platforms.

Cloud environments also support containers, so you can deploy your containerized applications to AWS, Azure, GCP or the service provider of your choice.

Docker's Importance in IT

Containerization itself brings many benefits to the organization, including return on investment (ROI), efficiency, standardization, scalability, simplicity and portability.

Docker specifically brings its own strengths to the table, including:

- Support by all major cloud service providers

- Cross-platform options for Linux, macOS and Windows

- Straightforward syntax

- Consistent, repeatable results

Docker can be a great option, but it is not always the right tool for the job. It is heavily oriented toward developers, and "portability" can have a limited meaning.

Here are a few considerations while deciding whether Docker containers are the right tools for your job:

- It is difficult to integrate a graphical interface, so it doesn't suit graphics-based applications.

- Apps are isolated from each other within containers, but they all share the same host OS kernel, so there is a potential risk at that layer.

- By default, Docker runs as a daemon with root privileges, so a process that breaks out of a container does so as root, which is very dangerous.

- Storage and networking may be challenging to configure for some users.

There is little to no ability to share containers natively between Linux, macOS and Windows, and you may have issues between Linux distros. While Docker documentation correctly states that Docker containers run on Windows and macOS, the reality is that Docker (and the containers) run in a virtual machine on these two platforms. That's not native support.

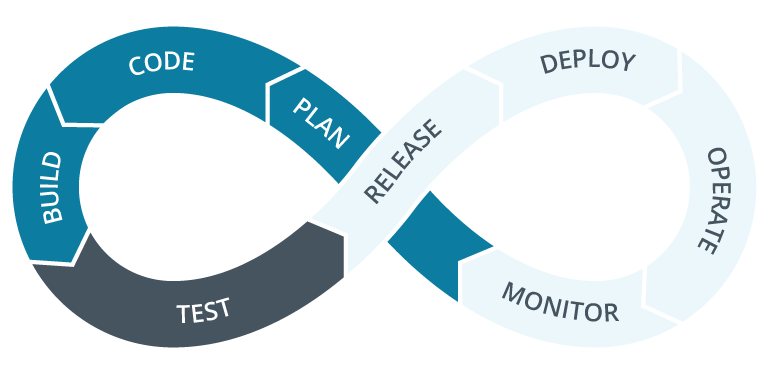

Docker's Role in CI/CD and DevOps

As a platform for developing and deploying applications, Docker plays an essential role in continuous integration/continuous deployment (CI/CD) methods. One key component of CI/CD pipelines is the ability to build and test in isolated, clean containers, ensuring no unexpected dependencies are introduced.

The same images, binaries and sources are used at every stage of this test-driven development approach. Docker deployment scripts are integrated into common pipeline management tools to provide a build > test > production flow. Docker is yet another tool for bridging the gap between development and operations teams in a DevOps environment.

Dockerfiles (covered in more detail below) contain the instructions and configurations necessary to build images. These images are the sources for containers. Since Dockerfiles are simply files of code, it makes good sense to centralize and manage them using the Git version control and repository platform. Many development environments already rely on Git as a single source of truth in a larger DevOps (or GitOps) solution.
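The build > test > production flow described above can be sketched as a small shell step that a pipeline tool might call. This is only an illustration under stated assumptions: the image name registry.example.com/myapp, the test script ./run-tests.sh and the DRY_RUN switch are hypothetical placeholders, and the script prints the commands instead of running them unless DRY_RUN is set to 0.

```shell
#!/bin/sh
# Sketch of a build > test > push pipeline step.
# IMAGE, TEST_CMD and DRY_RUN are hypothetical placeholders for this example.
IMAGE="${IMAGE:-registry.example.com/myapp}"
# Tag the image with the short Git commit hash, or "dev" outside a repository.
TAG="${TAG:-$(git rev-parse --short HEAD 2>/dev/null || echo dev)}"
TEST_CMD="${TEST_CMD:-./run-tests.sh}"
DRY_RUN="${DRY_RUN:-1}"

run() {
    # Print each command; execute it only when DRY_RUN is 0.
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

# Each stage runs only if the previous one succeeded.
# TEST_CMD is left unquoted on purpose so it can carry arguments.
run docker build -t "$IMAGE:$TAG" . &&
run docker run --rm "$IMAGE:$TAG" $TEST_CMD &&
run docker push "$IMAGE:$TAG"
```

Tagging the image with the commit hash ties each build back to the exact Dockerfile and source revision stored in Git, so every stage of the pipeline works from the same image.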

Docker Components

Docker consists of several components that work together to provide containerization and integrate containers into your CI/CD pipeline.

Here are some key pieces of the Docker solution:

- Docker Engine: Provides the server, daemon, CLI and API components necessary to host and run containers.

- Dockerfile: Contains instructions for building Docker images.

- Image: Immutable template containing the application code and configuration. Containers are created from images; in effect, an image is a container that is not yet running.

- Container: A running instance of an image, providing an isolated application environment with the potential for networking and storage.

- DockerHub: Image repository with public and private options for developers to store images. CI/CD pipelines can use DockerHub as a source.

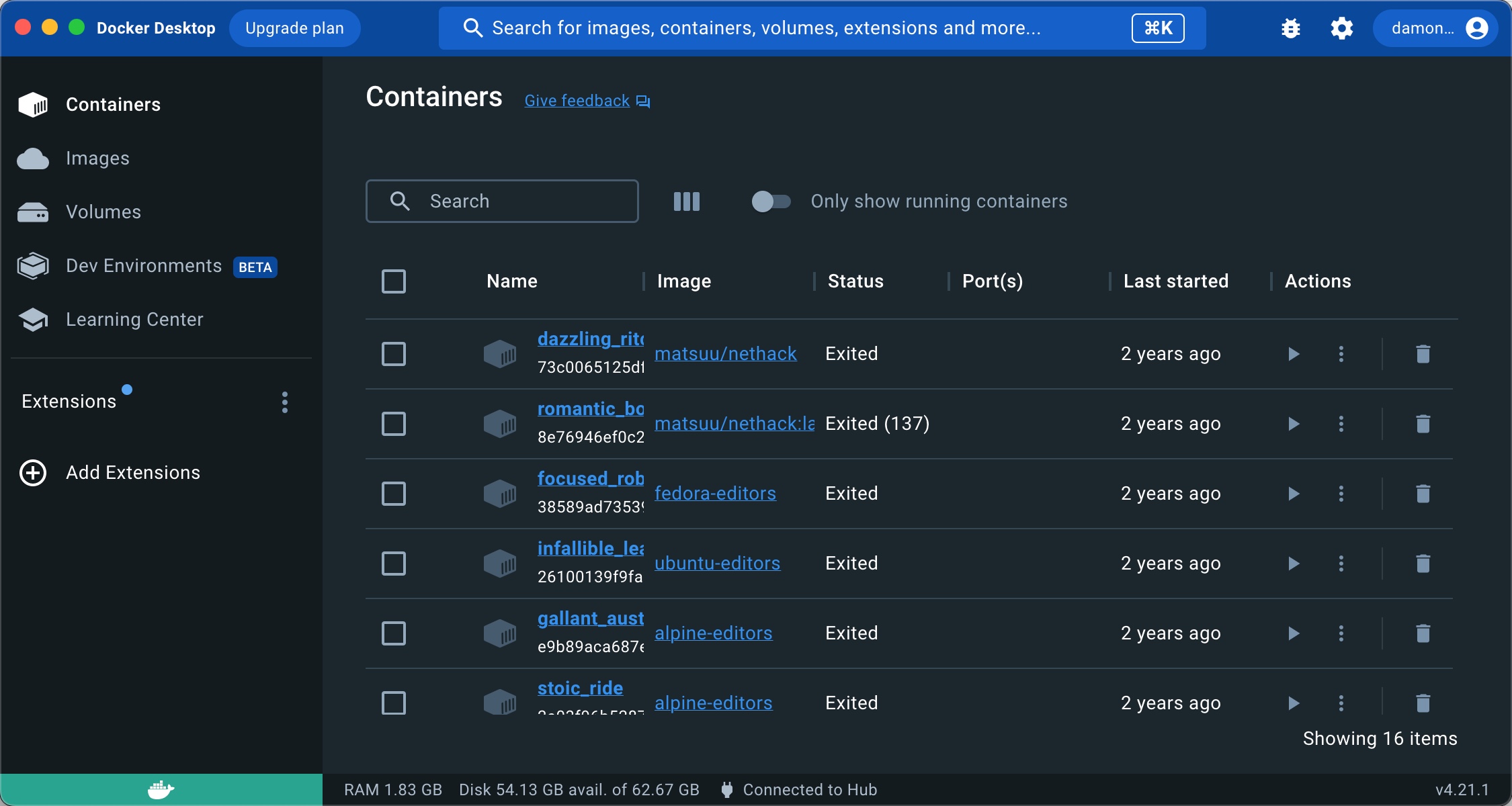

- Docker Desktop: Graphical management interface for Docker containers on Linux, Windows and macOS. Some users will find this more intuitive than the command-line environment.

- Docker Compose: Tool that uses a YAML file to streamline management of multiple containers in larger projects.
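To make the Docker Compose entry above more concrete, the following sketch writes a minimal Compose file and asks Docker to validate it. It assumes the Compose plugin is installed; the service names web and worker are hypothetical, and both services simply reuse the busybox image.

```shell
#!/bin/sh
# Write a minimal Compose file to a scratch directory and validate it.
# The service names "web" and "worker" are hypothetical.
workdir=$(mktemp -d)
cat > "$workdir/compose.yaml" <<'EOF'
services:
  web:
    image: busybox
    command: sleep 300
  worker:
    image: busybox
    command: sleep 300
EOF

if command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
    # "config" parses the file and prints the resolved configuration.
    docker compose -f "$workdir/compose.yaml" config
else
    echo "Docker Compose is not available; file written to $workdir/compose.yaml"
fi
```

With a file like this, docker compose up -d would start both containers together and docker compose down would stop and remove them.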

How to Install Docker

Docker runs natively on Linux, so that will be the focus of this section. Windows and macOS users can follow the links to find installation options. There are also instructions for a Raspberry Pi installation.

Depending on your Linux system's configuration, you may need to use sudo for these commands.

Install the Docker container engine on a Fedora Linux system using these commands:

$ sudo dnf -y install dnf-plugins-core

$ sudo dnf config-manager --add-repo https://download.docker.com/linux/fedora/docker-ce.repo

$ sudo dnf install docker-ce docker-ce-cli containerd.io

If you use Ubuntu Linux, you'll need to follow these instructions and use the apt package manager:

$ sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Note: You will need to set up the Docker repository for the apt package manager. Information for configuring the repo can be found by following the link above.

This command installs the basic Docker components, including the containerd runtime environment, which handles the entire container lifecycle.

Next, start and enable Docker:

$ sudo systemctl start docker

$ sudo systemctl enable docker
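A quick way to confirm the installation is to ask the client for its version and check whether the service is active. The following is a minimal sketch, assuming a systemd-based distribution; it only reports status and changes nothing on the system.

```shell
#!/bin/sh
# Report whether the Docker client is installed and whether the daemon is active.
if command -v docker >/dev/null 2>&1; then
    docker --version
    docker_client="installed"
else
    echo "docker client not found in PATH"
    docker_client="missing"
fi

# systemctl is-active prints the unit state (for example "active" or "inactive").
if command -v systemctl >/dev/null 2>&1; then
    docker_service=$(systemctl is-active docker 2>/dev/null || true)
else
    docker_service="unknown"
fi
echo "client: $docker_client, service: ${docker_service:-unknown}"
```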

Docker Desktop (for Linux, macOS or Windows) is the easiest way to get all the components, but it may not be appropriate for all environments. Still, the desktop is a good way to get started.

You're now ready to run containers on your local system.

Start Using Docker

Once you have installed Docker, you can start working with images and containers. After that, begin building custom images using Dockerfiles.

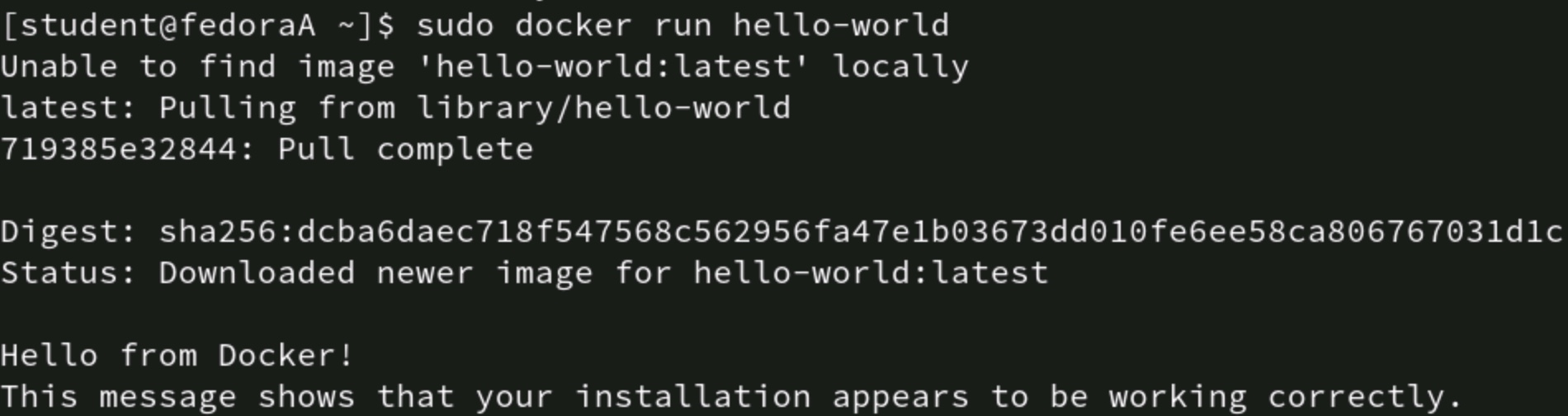

The first step is usually just a simple test using a sample image provided by Docker. Run this hello-world image by using the following command:

$ sudo docker run hello-world

The hello-world image shows you can run containers using Docker. You can now start finding more useful containers.

A common and helpful container to get started with is BusyBox. It contains a collection of utilities that closely emulate the full GNU applications found in a regular Linux distribution. The idea is to provide a simple and functional Linux-like environment for further development. DockerHub shows the most popular images, and BusyBox always seems to be among them.

Begin by pulling (downloading) the image from a repository:

$ sudo docker pull busybox

List the available images to confirm the pull function worked as expected:

$ sudo docker images

You should see the BusyBox image listed, along with any others you might have pulled (including the test hello-world image).

Next, run the image using the following flags:

$ sudo docker run -it --rm busybox

Note the -it and --rm flags in the above command. Here's an explanation:

| Flag | Description |
| --- | --- |
| -it | Combines -i (interactive) and -t (allocate a terminal) to provide an interactive shell, allowing you to run commands in the container |
| --rm | Removes the container and its file system after the container exits. Useful for test containers |

Since you have an interactive shell on BusyBox, you can begin entering commands to interact with the container.

Try a few common commands, such as those in the table below.

| Command | Description |
| --- | --- |
| ls | List the contents of a directory |
| ping | Ping a remote system |
| vi | Open the vi text editor |
| ps | Display running processes on the system |
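The same commands can also be run without an interactive shell by passing them after the image name. The sketch below assumes Docker is installed and that the current user may talk to the daemon (prefix the commands with sudo otherwise); it skips the commands if Docker is not reachable.

```shell
#!/bin/sh
# Run single BusyBox commands in throwaway containers, no interactive shell needed.
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    docker run --rm busybox ls /                  # list the container's root directory
    docker run --rm busybox ps                    # processes visible inside the container
    docker run --rm busybox ping -c 1 127.0.0.1   # one ping to the loopback address
    oneshot="done"
else
    echo "Docker is not available; skipping"
    oneshot="skipped"
fi
```

Each command runs in its own new container, and --rm removes that container as soon as the command finishes.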

You can start and stop existing containers using the related subcommands: start and stop. Note that this differs from using docker run, which launches a new container from an image.

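Not only can you stop a container, but if it becomes unresponsive you can also kill it using the kill subcommand (docker container kill), which stops it immediately.

Note that Docker groups its commands by the object they manage. Commands for individual containers use the docker container subcommand syntax (for example, docker container start), and many also have shorter top-level forms such as docker run and docker ps.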

For example, to run a new container from an image, type:

$ sudo docker run busybox

To start an existing but stopped container, type:

$ sudo docker container start <container_id>

Display containers by using the docker ps command. By default it lists only running containers; the -a option includes stopped ones:

$ sudo docker ps -a

This command shows the status of recent containers on the system, giving you an idea of your system's container workload.
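The start, stop and kill subcommands described above can be tried together on one test container. This is a minimal sketch, assuming Docker is reachable by the current user; the container name demo-busybox is a hypothetical choice for this example.

```shell
#!/bin/sh
# Walk one container through create, stop, start, kill and remove.
# "demo-busybox" is a hypothetical container name chosen for this example.
name="demo-busybox"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # -d runs the container in the background; sleep keeps it alive.
    docker run -d --name "$name" busybox sleep 300
    docker container stop "$name"       # graceful stop; may wait for the stop timeout
    docker container start "$name"      # restart the same container, not a new one
    docker ps -a --filter "name=$name"  # show its current status
    docker container kill "$name"       # immediate stop (SIGKILL by default)
    docker container rm "$name"         # clean up the stopped container
    lifecycle="done"
else
    echo "Docker is not available; skipping the demo"
    lifecycle="skipped"
fi
```

Note that docker container start reuses the existing container and its file system, while docker run would have created a second container from the image.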

The following table summarizes common Docker commands, including those covered above, for quick reference:

| Command | Description |
| --- | --- |
| docker pull | Download an image from a repository |
| docker push | Upload an image to a repository |
| docker build | Create an image from a Dockerfile |
| docker run | Run a container from an image |
| docker images | List available images |
| docker ps | List containers |
| docker container start | Start an existing but stopped container |
| docker container stop | Stop an existing container |

Create an Image With a Dockerfile

What if you want to customize your image? BusyBox is handy, but it only contains certain generic functions. You might need a container with greater capabilities or one that runs your new custom Python app. That's where Dockerfiles come in.

Dockerfiles contain instructions on building an image (recall that containers are running instances of images).

A simple Dockerfile might add a program such as the nano text editor to an existing image.

Here's an example of a very simple Dockerfile you create in a text editor:

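FROM ubuntu

RUN apt-get update && apt-get install -y nano

The lines in the Dockerfile:

- The FROM line indicates the base image to be used. This example uses the ubuntu image rather than BusyBox because BusyBox does not include a package manager such as apt.

- The RUN line executes the apt package manager while the image is being built. Here it refreshes the package index and then installs the nano text editor.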

After you build an image from this Dockerfile with docker build and run a container from the new image, nano is available for managing configuration files.
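Building and testing such an image might look like the sketch below. It assumes a Debian-based base image (ubuntu here), because apt-get must exist in the base image for the RUN line to work; the image tag nano-demo is a hypothetical name, and the build is skipped if Docker is not reachable.

```shell
#!/bin/sh
# Write the example Dockerfile to a scratch directory, then build and test it.
# "nano-demo" is a hypothetical image tag chosen for this example.
workdir=$(mktemp -d)
cat > "$workdir/Dockerfile" <<'EOF'
FROM ubuntu
RUN apt-get update && apt-get install -y nano
EOF

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # -t names the image; the final argument is the build context directory.
    docker build -t nano-demo "$workdir"
    # Run nano once in a throwaway container to confirm it is installed.
    docker run --rm nano-demo nano --version
else
    echo "Docker is not available; Dockerfile written to $workdir"
fi
```

The built image then appears in the docker images list alongside the images pulled earlier.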

Some Docker Misconceptions

Most developers and administrators are pretty clear on what Docker is and what it does. Newer folks might be under the misconception that containers replace virtual machines, which is not the case. The two virtualization technologies each have a role in modern IT, sometimes individually and sometimes complementing each other.

One concern some users have is that they'll have to work at the Docker command line all the time. However, Docker Desktop is available for all the major platforms and provides a user-friendly graphical interface. Folks who only work with Docker periodically may find this to be a significant benefit.

Another misconception is that Docker is a Linux-only solution. There is no doubt that Docker is Linux-centric, but there are robust solutions for Windows and macOS. The containers run in a special VM on these platforms, but they do run and provide a viable containerization solution.

Integrate Docker Into Existing Workflows

Docker is the most popular and well-known container solution out there, and it's easy to get started with it. By offering clear and complete documentation, graphical solutions and flexible options, Docker, as a company, makes its container platform very attractive.

Start by understanding some basic Docker terminology and then work with simple containers. Graduate upward to more complex containers and look for ways to integrate Docker into your existing CI/CD workflows.